Stephen Wolfram is talking about the Ruliad; Michael Levin is talking about Platonic Space. They sound very similar… 🤔

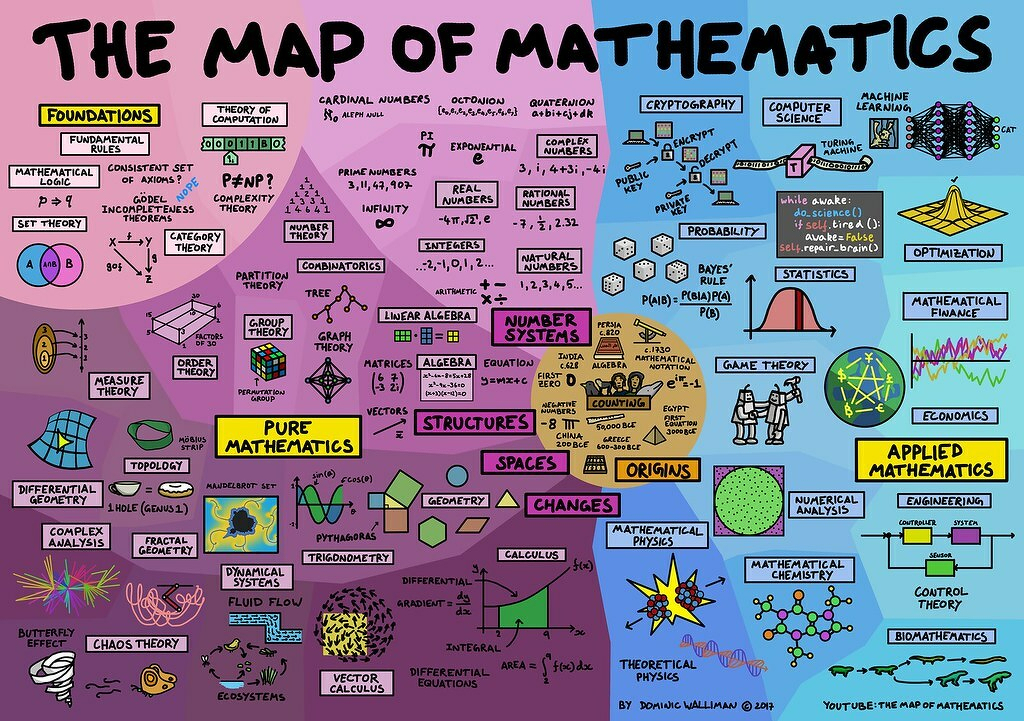

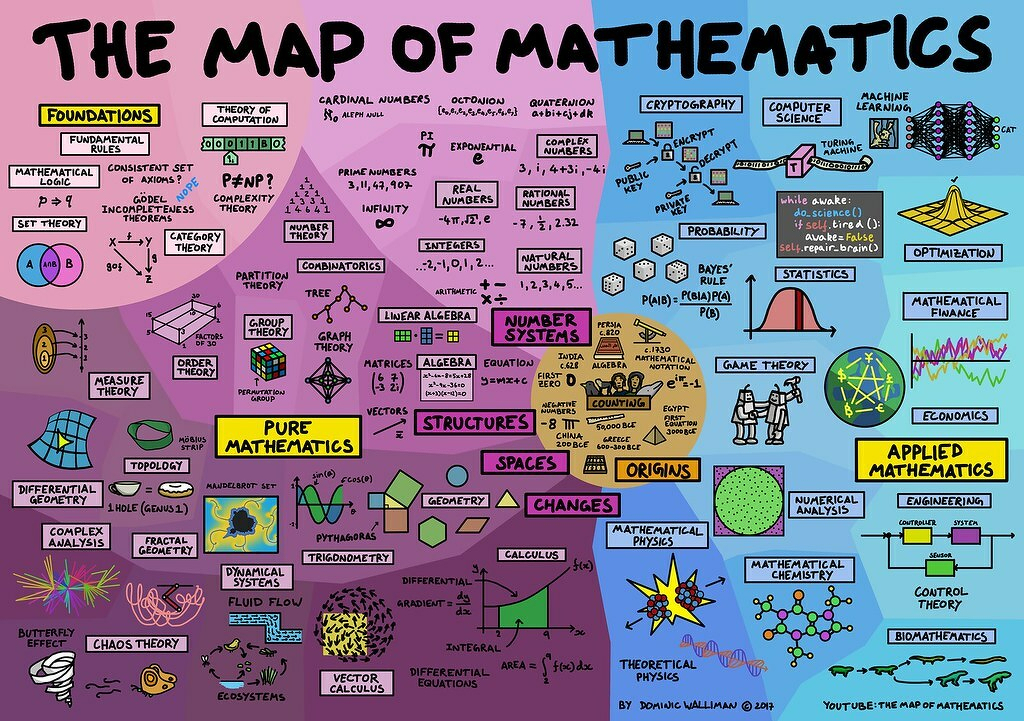

And here’s the language of the space (any space!) 🤓

A positive experience with Claude Code on the web for a small change. Will it offer value for bigger changes?

Exciting day today - this quote captures it well.

“The most important thing in life is to be true to yourself. Because if we’re not true to ourselves, then how can we be true to life?”

Business, innovation, father, husband, son, brother, and friend.

Meditator, artist, and warrior.

Wow, I think I’ve just turned into the final straight on my paper. It is both exactly what I thought it’d be and completely new to me. Writing down my intuition, with as much rigour as I can, is a great experience. Now I find out that someone else has already done something similar, and I just hadn’t found or understood what they said! It is still a great experience to have worked up to this point and to understand it.

An explanation of the getKeys operator in the Introduction to AI Planning paper from Marco Aiello and Ilche Georgievski (16 Dec 2024).
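To make the idea of a planning operator concrete, here is a minimal STRIPS-style sketch in Python. It assumes the classical representation of an operator as preconditions, an add list, and a delete list; the predicates (`at(robot, hall)`, `keys_at(hall)`, `holding(keys)`) are hypothetical and not taken from the paper, which may define getKeys differently.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Operator:
    """A STRIPS-style operator; a state is a frozenset of ground atoms (strings)."""
    name: str
    pre: frozenset     # atoms that must hold before applying
    add: frozenset     # atoms made true by applying
    delete: frozenset  # atoms made false by applying

    def applicable(self, state: frozenset) -> bool:
        return self.pre <= state

    def apply(self, state: frozenset) -> frozenset:
        if not self.applicable(state):
            raise ValueError(f"{self.name} is not applicable in this state")
        # Remove the delete list first, then add the add list.
        return (state - self.delete) | self.add

# Hypothetical getKeys: the robot must be in the hall where the keys are.
get_keys = Operator(
    name="getKeys",
    pre=frozenset({"at(robot, hall)", "keys_at(hall)"}),
    add=frozenset({"holding(keys)"}),
    delete=frozenset({"keys_at(hall)"}),
)

s0 = frozenset({"at(robot, hall)", "keys_at(hall)"})
s1 = get_keys.apply(s0)
print(sorted(s1))  # ['at(robot, hall)', 'holding(keys)']
```

In this sketch the operator is a pure function from state to state: it checks the preconditions, then rewrites the state. Once the keys have been picked up, getKeys is no longer applicable, because `keys_at(hall)` has been deleted.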

The more ways a system can achieve a function, the more robust and adaptable it becomes. I think it is fair to say we tend to think of “degenerate” as a pejorative, implying something broken, collapsing, or inferior. But in complex systems (biological, neural, or artificial), degeneracy means something far more interesting: different structures performing similar functions.
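A toy way to see the robustness claim: model a system as a few hypothetical pathways, each a set of components that can achieve the same function on its own, then count how often the function survives when components fail. The pathway names and the knockout measure below are illustrative assumptions, not a standard definition of degeneracy.

```python
from itertools import combinations

def function_survives(pathways, failed):
    """True if at least one pathway has none of its components in `failed`."""
    return any(not (pathway & failed) for pathway in pathways)

def robustness(pathways, k=1):
    """Fraction of all k-component failures after which the function is still achievable."""
    components = set().union(*pathways)
    failures = [set(combo) for combo in combinations(sorted(components), k)]
    survived = sum(function_survives(pathways, failed) for failed in failures)
    return survived / len(failures)

single_route = [{"a", "b"}]              # one structure achieves the function
degenerate = [{"a", "b"}, {"c", "d"}]    # two different structures achieve the same function

print(robustness(single_route))  # 0.0
print(robustness(degenerate))    # 1.0
```

With a single route, any one failure breaks the function; with two structurally different routes, every single failure is absorbed. Under double failures the degenerate system survives only when both failures land on the same route, which is a reminder that degeneracy buys robustness, not invulnerability.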

Generalisation… I was comparing State Spaces and Solution Spaces and realised that I may be talking about generalisation. The post dives into the definitions to prompt some thought.

Planning is offline search.

Planning is offline search.

Planning is… yup, it is offline search.
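A minimal sketch of what “offline” means here: the planner below runs breadth-first search over a small hypothetical state space and returns the complete action sequence before any action is executed. The actions and predicates are invented for illustration, and breadth-first search is just one choice of search strategy.

```python
from collections import deque

# Hypothetical actions: (name, preconditions, add list, delete list).
ACTIONS = [
    ("go(hall, office)", {"at(hall)"}, {"at(office)"}, {"at(hall)"}),
    ("go(office, hall)", {"at(office)"}, {"at(hall)"}, {"at(office)"}),
    ("getKeys", {"at(hall)", "keys_at(hall)"}, {"holding(keys)"}, {"keys_at(hall)"}),
    ("unlock(office)", {"at(office)", "holding(keys)"}, {"unlocked(office)"}, set()),
]

def plan(initial, goal, actions):
    """Breadth-first search from `initial` to any state containing every atom in `goal`.

    The whole plan is found by search before anything is executed (offline);
    returns a list of action names, or None if the goal is unreachable.
    """
    start = frozenset(initial)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, path = frontier.popleft()
        if goal <= state:
            return path
        for name, pre, add, delete in actions:
            if pre <= state:
                nxt = (state - delete) | add  # stays a frozenset, so it is hashable
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, path + [name]))
    return None

print(plan({"at(hall)", "keys_at(hall)"}, {"unlocked(office)"}, ACTIONS))
# ['getKeys', 'go(hall, office)', 'unlock(office)']
```

Only after `plan` returns would an executor carry out the actions. An online approach would instead interleave choosing and executing actions, reacting to what it observes along the way.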

This guide outlines the steps to build the offline speech-to-text application Handy from source on an Intel Mac, including installation of necessary tools and troubleshooting common issues.

Agentic Context Engineering (ACE): Self-Improving LLMs via Evolving Contexts, Not Fine-Tuning

Interesting concept, but it lacks rigour, so it feels like the legend of the boy who put his finger in the dike.

There are better ways to manage context.

"Domain Modelling is itself the process of learning, you cannot know it all at the start, and should expect to update aspects at any stage of the product development".

Do I need patience to learn to direct coding agents, or is it time to learn a new language and develop in that?

This is about connection: both with a fellow human who is interested in, and articulate about, Artificial Intelligence, and the connection of the information input, processed, and produced. The information is LLM-as-a-Judge: we chat about the survey paper and how it can be applied to modern AI applications. There’s a human-written blog post, a YouTube video, and a NotebookLM to chat to. Fill your boots :)
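For readers new to the idea, here is a minimal pointwise LLM-as-a-Judge sketch: a rubric prompt asks a model to score a response, and the score is parsed from its reply. `call_llm` is a hypothetical stand-in for whatever model client you use, and the rubric wording and 1 to 5 scale are assumptions rather than anything taken from the survey. Pairwise comparison of two responses is another common setup.

```python
import re
from typing import Callable

# Hypothetical rubric; real judges usually need task-specific criteria.
RUBRIC = """You are an impartial judge. Rate the RESPONSE to the QUESTION
for factual accuracy and helpfulness on a scale of 1 to 5.
Give a short justification, then a final line 'Score: <n>'.

QUESTION: {question}
RESPONSE: {response}"""

def judge(question: str, response: str, call_llm: Callable[[str], str]) -> int:
    """Ask a judge model to score `response`; return the last 'Score: n' it emits."""
    verdict = call_llm(RUBRIC.format(question=question, response=response))
    scores = re.findall(r"Score:\s*([1-5])\b", verdict)
    if not scores:
        raise ValueError("judge output did not contain a score")
    return int(scores[-1])

def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call, so the sketch runs offline.
    return "The answer is correct and concise.\nScore: 4"

print(judge("What is 2 + 2?", "4", fake_llm))  # 4
```

Parsing a fixed final line keeps the judge's free-text reasoning separate from the number you aggregate; failing loudly when no score appears is safer than silently defaulting.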

Google Meet, YouTube, and NotebookLM make for great research utilities.

Some great comments on pushing deeper into the tech stack, getting closer to GPUs, and keeping your eyes open: the next thing can come from anywhere.

Getting into the Paris AI Engineer conference spirit with a new avatar for the week!

What is an AI Engineer?

Think like a data scientist, build like a software engineer.

Thanks to Jason Mumford for this definition.

Claude Code is monitoring file changes - this is good (I think!!)

⏺ Excellent! I can see you’ve already started updating the documentation files. You’ve successfully changed reasoning_parameters to agent_parameters in the domain model, data model, and agents files.

Finished reading: Dune: The Machine Crusade by Brian Herbert 📚

Great book: interesting character development. There was a slightly weird shift into the final parts, but it ended well.

There are some interesting challenges in accepting the way machine intelligence works. It is very symbolic, with no understanding of emotions, which made for a nice story, and it works well within the canon of the book.

I’ve been diving into the current thinking around consciousness. With the Turing Test, Turing sidestepped the question of whether machines can be conscious, which kept the question at a distance until ChatGPT! The view that consciousness comes from natural language, and can be defined with natural language, does not sit easily with me. In the video below, Susan Schneider goes into some detail on that aspect. The personal benefit is that I’ve come to the view that the Hard Problem of Consciousness is not a problem, as it does not exist.