[IA Series 3/n] Intelligent Agents Term Sheet

Introduction

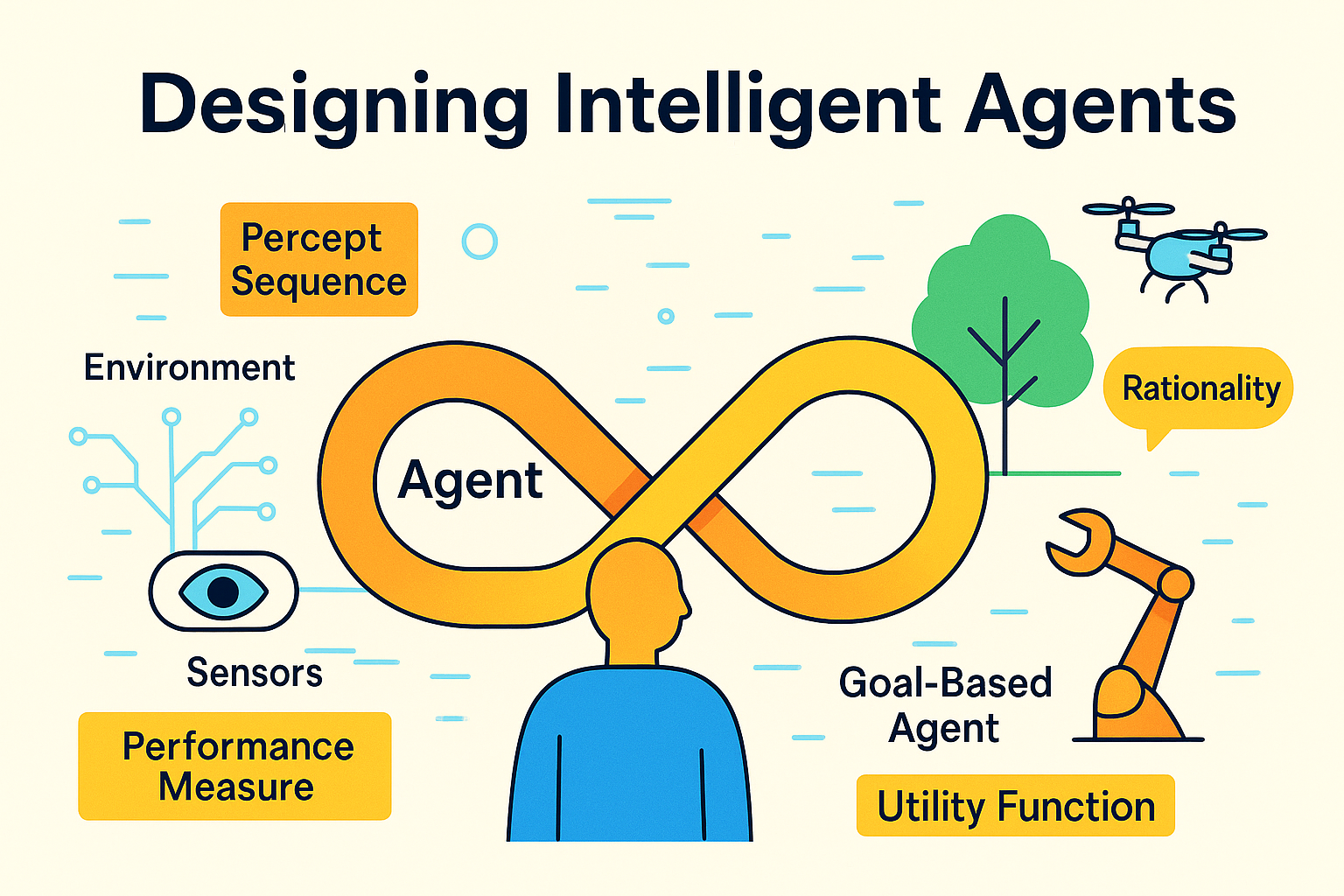

This could have been the first post in the series, but Search is such a big and practical topic that it took precedence. The terminology of Intelligent Agents rests on a foundation of theory and shared vocabulary, and it has taken considerable reading to grasp the significance of these definitions, particularly Rationality, the linchpin that lets us design and measure Intelligent Agents effectively.

Most of these terms come from Russell and Norvig’s influential ‘Artificial Intelligence: A Modern Approach,’ first published in 1995 and now in its fourth edition. My contribution is modest: I’ve found it helpful to label certain metrics as ‘irrational’ to explain unexpected agent behaviour, and I’ve compiled the book’s approach into an Agent Design Process at the end.

In the next post, I’ll explore characteristics of agents that mirror human and animal traits—behaviour, desires, beliefs, knowledge, and promises. For now, I’m focusing on Core Terms and a Design Process. I’ve kept these separate to emphasise the importance of the terminology below, which provides tangible and common concepts applicable to most (possibly all) agent types.

Core Terminology

- Agent: An entity that perceives its environment through sensors and acts upon that environment through actuators to achieve goals.

- Environment: The external world or setting in which an agent operates and with which it interacts.

- Sensors: The means by which the agent perceives its environment.

- Actuators: The means by which the agent acts upon its environment.

- Percept: A single unit of perception or input that an agent receives from its environment at a given moment.

- Percept Sequence: The complete history of everything the agent has perceived up to the current moment.

- Action: What an agent does in response to a percept. The actions an agent can perform represent its capacity to change its environment.
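To make these terms concrete, here is a minimal sketch of the agent-environment loop using a two-square vacuum world. The class and function names (`VacuumWorld`, `reflex_vacuum`, `run`) are my own illustrative choices, not an established API, and the percept format `(location, is_dirty)` is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class VacuumWorld:
    """Environment: two squares, A and B, each either dirty or clean."""
    dirty: dict = field(default_factory=lambda: {"A": True, "B": True})
    location: str = "A"

    def sense(self):
        # Sensor: produces one percept, (location, is_dirty), for this moment.
        return (self.location, self.dirty[self.location])

    def apply(self, action):
        # Actuator effects: how each action changes the environment.
        if action == "Suck":
            self.dirty[self.location] = False
        elif action == "Right":
            self.location = "B"
        elif action == "Left":
            self.location = "A"


def reflex_vacuum(percept):
    """Agent: chooses an action from the current percept only."""
    location, is_dirty = percept
    if is_dirty:
        return "Suck"
    return "Right" if location == "A" else "Left"


def run(env, agent, steps):
    """Drive the loop and record the percept sequence and the actions taken."""
    percept_sequence, actions = [], []
    for _ in range(steps):
        percept = env.sense()
        percept_sequence.append(percept)
        action = agent(percept)
        env.apply(action)
        actions.append(action)
    return percept_sequence, actions
```

Calling `run(VacuumWorld(), reflex_vacuum, 4)` returns the full percept sequence alongside the actions, which makes the distinction between a single percept and the percept sequence visible.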

Performance and Rationality

- Performance Measure: Evaluates the behaviour of the agent in an environment. As a general rule it is better to design performance measures according to what one actually wants in the environment, rather than according to how one thinks the agent should behave.

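- Rationality: Doing the right thing given what the agent knows. What is rational at any moment depends on four things: the performance measure that defines success, the agent’s prior knowledge of the environment, the actions available to it, and its percept sequence to date. A rational agent acts to achieve the best expected outcome under that measure.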

- Rational Agent: For each possible percept sequence, a rational agent should select an action that is expected to maximise its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.

- Irrational Performance Metrics: (My definition to help understand why an agent may act irrationally) Metrics that can lead to undesirable or counterproductive behaviour when used to evaluate agent performance.
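A small sketch may help show how a poorly chosen metric produces "irrational" behaviour. It assumes a one-square world where the agent can also dump dirt back out; the policies `honest` and `gamer`, the `Dump` action, and the two scores are hypothetical names invented for illustration.

```python
def simulate(policy, steps):
    """Run a policy in a one-square world and return two candidate scores.

    Returns (dirt_collected, clean_steps):
      dirt_collected - counts every successful 'Suck' (a metric about behaviour)
      clean_steps    - counts time steps the square ends up clean (what we want)
    """
    dirty = True
    dirt_collected = 0
    clean_steps = 0
    for _ in range(steps):
        action = policy(dirty)
        if action == "Suck" and dirty:
            dirty = False
            dirt_collected += 1
        elif action == "Dump" and not dirty:
            dirty = True
        if not dirty:
            clean_steps += 1
    return dirt_collected, clean_steps


def honest(dirty):
    # Cleans once, then leaves the square alone.
    return "Suck" if dirty else "NoOp"


def gamer(dirty):
    # Exploits the 'dirt collected' metric by re-dirtying the square.
    return "Suck" if dirty else "Dump"
```

Over six steps the `gamer` policy collects more dirt than `honest` yet leaves the square clean for fewer steps. Measured by dirt collected, the gaming behaviour looks optimal, which is why the measure should describe the desired state of the environment rather than the agent's activity.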

Agent Architecture

- Agent Function: An abstract mathematical mapping from any given percept sequence to an action. It describes the agent’s behaviour without saying how that behaviour is computed.

- Agent Program: The concrete implementation of the agent function for an artificial agent, running on the agent’s physical architecture.

- Task Environment: The problem the agent is built to solve, specified by its Performance measure, Environment, Actuators, and Sensors (PEAS). Together these provide a complete specification of the problem domain.
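The difference between an agent function and an agent program can be sketched as follows. The table below is a deliberately partial, hand-written agent function for a two-square vacuum world, and `make_table_driven_agent` and `reflex_program` are illustrative names of my own; the percept format `(location, is_dirty)` is an assumption.

```python
# Agent function: an explicit (and here only partial) table that maps
# percept sequences to actions. A full table grows with every possible history.
AGENT_FUNCTION = {
    (("A", False),): "Right",
    (("A", True),): "Suck",
    (("B", False),): "Left",
    (("B", True),): "Suck",
    (("A", True), ("A", False)): "Right",
    (("A", False), ("B", True)): "Suck",
}


def make_table_driven_agent(table):
    """Agent program 1: looks up the whole percept sequence in the table."""
    percepts = []

    def program(percept):
        percepts.append(percept)
        # Returns None when the sequence is missing from the partial table.
        return table.get(tuple(percepts))

    return program


def reflex_program(percept):
    """Agent program 2: a few rules that reproduce the same mapping."""
    location, is_dirty = percept
    if is_dirty:
        return "Suck"
    return "Right" if location == "A" else "Left"
```

Both programs implement the same agent function on the sequences listed, but the rule-based version does so without storing the table, which is the practical reason the two concepts are kept apart.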

Environment Classification

A task environment can be classified along the following dimensions:

- Fully vs. Partially Observable: Whether the agent can see all relevant aspects of the environment

- Deterministic vs. Stochastic: Whether actions have predictable effects

- Static vs. Dynamic: Whether the environment changes while the agent is deliberating

- Discrete vs. Continuous: Nature of states, time, and actions

- Single-agent vs. Multi-agent: Number of agents in the environment
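One way to record this classification is a small data structure with one flag per dimension. The sketch below is an assumption about how one might encode it; `EnvironmentProperties` and `challenges` are hypothetical names, and the two example classifications (a crossword puzzle and taxi driving) reflect the standard textbook view.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProperties:
    """One boolean per dimension; True is the 'easier' side of each pair."""
    fully_observable: bool
    deterministic: bool
    static: bool
    discrete: bool
    single_agent: bool

    def challenges(self):
        """List the harder side of every dimension that applies."""
        labels = [
            (self.fully_observable, "partially observable"),
            (self.deterministic, "stochastic"),
            (self.static, "dynamic"),
            (self.discrete, "continuous"),
            (self.single_agent, "multi-agent"),
        ]
        return [label for easy, label in labels if not easy]


# A crossword puzzle sits on the easy side of every dimension listed here.
crossword = EnvironmentProperties(True, True, True, True, True)

# Taxi driving sits on the hard side of every dimension.
taxi = EnvironmentProperties(False, False, False, False, False)
```

Here `crossword.challenges()` is empty while `taxi.challenges()` lists all five difficulties, a quick indication of how much more the taxi agent has to handle.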

Agent Type Taxonomy

Basic Agent Types

- Simple Reflex Agent: Acts only on current percept, maintains no internal state

- Model-Based Reflex Agent: Maintains internal state to track aspects of the environment

- Goal-Based Agent: Uses explicit goals to guide action selection beyond immediate rewards

- Utility-Based Agent: Selects actions to maximise a utility function that captures preferences over different outcomes

- Learning Agent: Improves performance through experience and feedback
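The difference between the first two agent types can be shown with a short sketch. It assumes a two-square vacuum world with percepts of the form `(location, is_dirty)` and assumes that `Suck` always succeeds; `simple_reflex` and `ModelBasedVacuum` are illustrative names, not a standard implementation.

```python
def simple_reflex(percept):
    """Simple reflex agent: decides from the current percept only."""
    location, is_dirty = percept
    if is_dirty:
        return "Suck"
    return "Right" if location == "A" else "Left"


class ModelBasedVacuum:
    """Model-based reflex agent: remembers which squares it believes are clean."""

    def __init__(self):
        self.known_clean = set()  # internal state, updated on every percept

    def __call__(self, percept):
        location, is_dirty = percept
        # Either the square is already clean, or it will be once we suck
        # (assumption: 'Suck' never fails and squares do not get dirty again).
        self.known_clean.add(location)
        if is_dirty:
            return "Suck"
        if self.known_clean >= {"A", "B"}:
            return "NoOp"  # nothing left to do, so stop moving
        return "Right" if location == "A" else "Left"
```

Given the same four percepts, the simple reflex agent keeps shuttling between squares forever, while the model-based agent uses its internal state to stop once both squares are believed clean.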

Specialised Agent Classes

- Knowledge-Based Agents: Use explicit logical representation and formal inference mechanisms. Typically implemented as sophisticated model-based agents using propositional or first-order logic.

- Problem-Solving Agents: A type of goal-based agent that uses atomic representations: states of the world are treated as wholes, with no internal structure visible to the problem-solving algorithms.

- Planning Agents: Another type of goal-based agent, which uses more advanced factored or structured representations.

Implementation Categories

- Human Agent: A person who acts as an agent

- Robotic Agent: A physical machine with sensors and actuators

- Software Agent: A program operating in digital environments

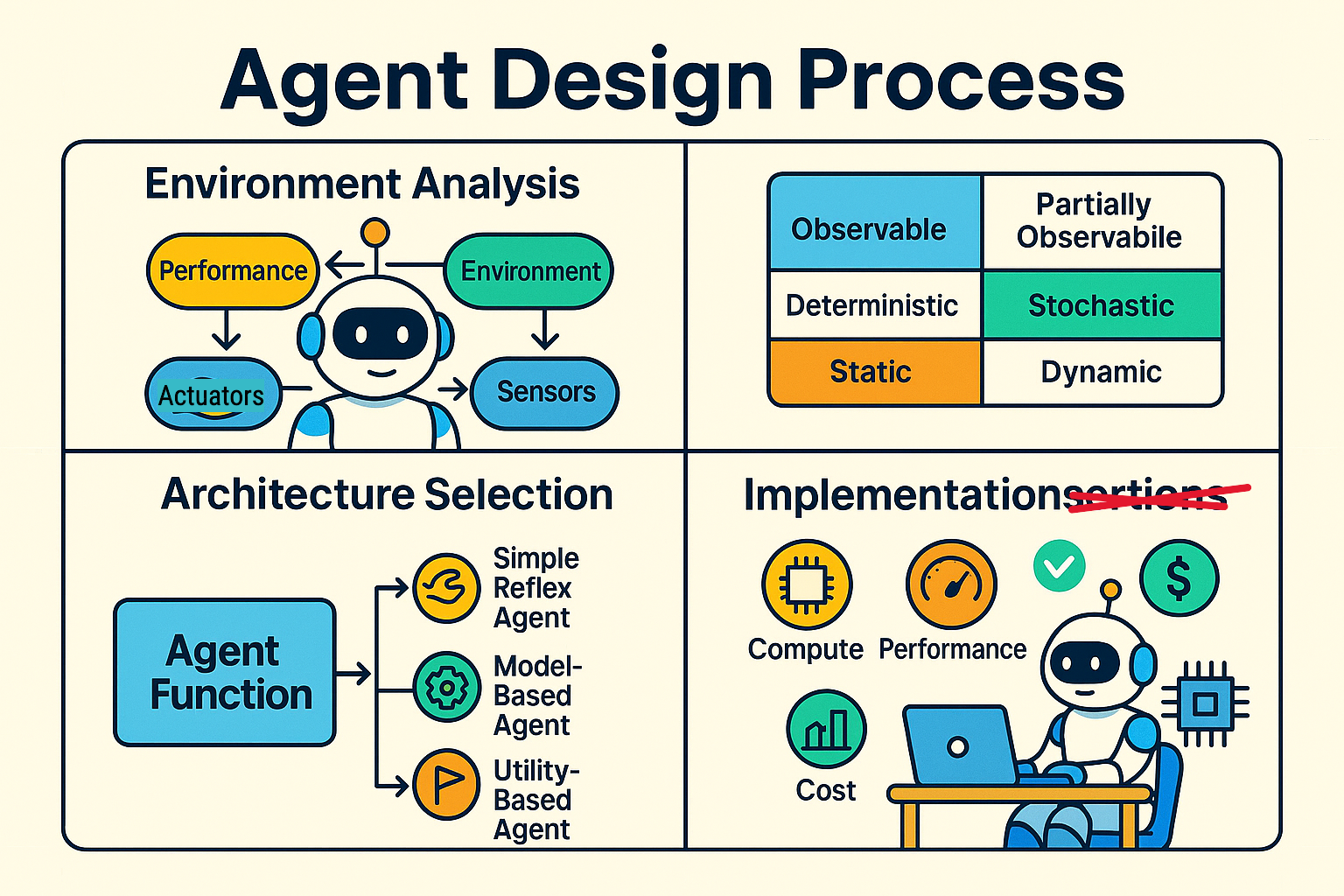

Agent Design Process

Environment Analysis

- Environment Specification: Specify the task environment using the PEAS framework (Performance measure, Environment, Actuators, Sensors)

- Environment Analysis: Determine the properties of the Task Environment (observable, deterministic, static, discrete, single/multi-agent)
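The specification step can be captured as a simple record. This is a sketch only: the `PEAS` class and its `is_complete` check are hypothetical, and the taxi entries are abbreviated, illustrative values loosely based on the textbook's automated-taxi example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PEAS:
    """Task environment specification: one tuple of entries per PEAS element."""
    performance_measure: tuple
    environment: tuple
    actuators: tuple
    sensors: tuple

    def is_complete(self):
        # A specification is usable only when every element has an entry.
        return all([
            self.performance_measure,
            self.environment,
            self.actuators,
            self.sensors,
        ])


# Illustrative, abbreviated specification for an automated taxi.
taxi = PEAS(
    performance_measure=("safe", "fast", "legal", "comfortable"),
    environment=("roads", "other traffic", "pedestrians", "customers"),
    actuators=("steering", "accelerator", "brake", "signal", "horn"),
    sensors=("cameras", "speedometer", "GPS", "odometer"),
)
```

Writing the specification down first makes gaps obvious: a draft with no performance measure fails `is_complete()` before any architecture has been chosen.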

Architecture Selection

- Agent Function: Define the ideal behaviour - what the agent ought to do - in abstract terms (mathematical mapping from percept sequences to actions)

- Agent Type Selection: Choose appropriate agent architecture (simple reflex, model-based, etc.) capable of implementing the agent function

Implementation Considerations

- Agent Program: Implement the chosen architecture within physical constraints (compute availability, performance vs cost, etc.)

Finally, a ChatGPT-generated view of the process. It’s nearly right!