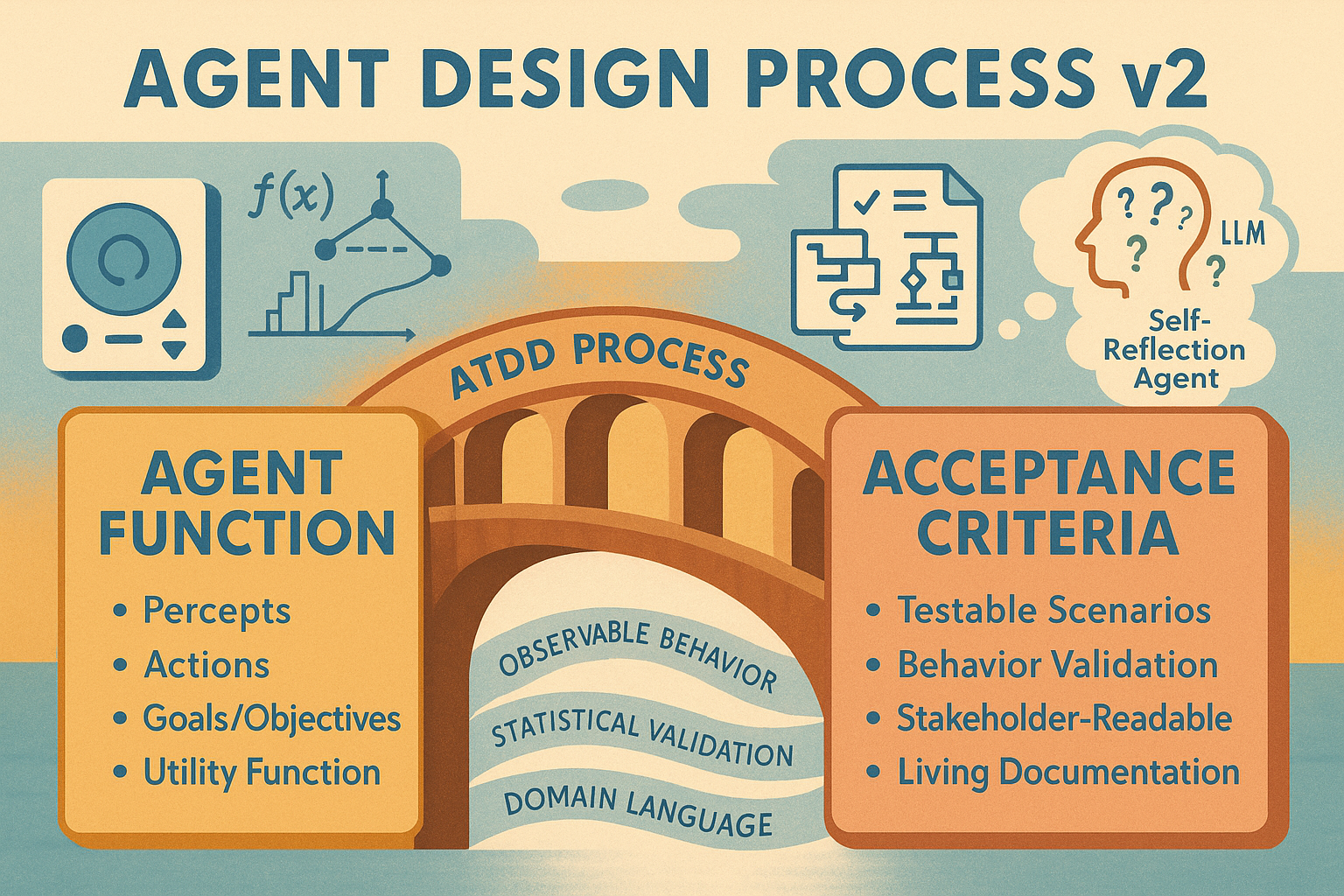

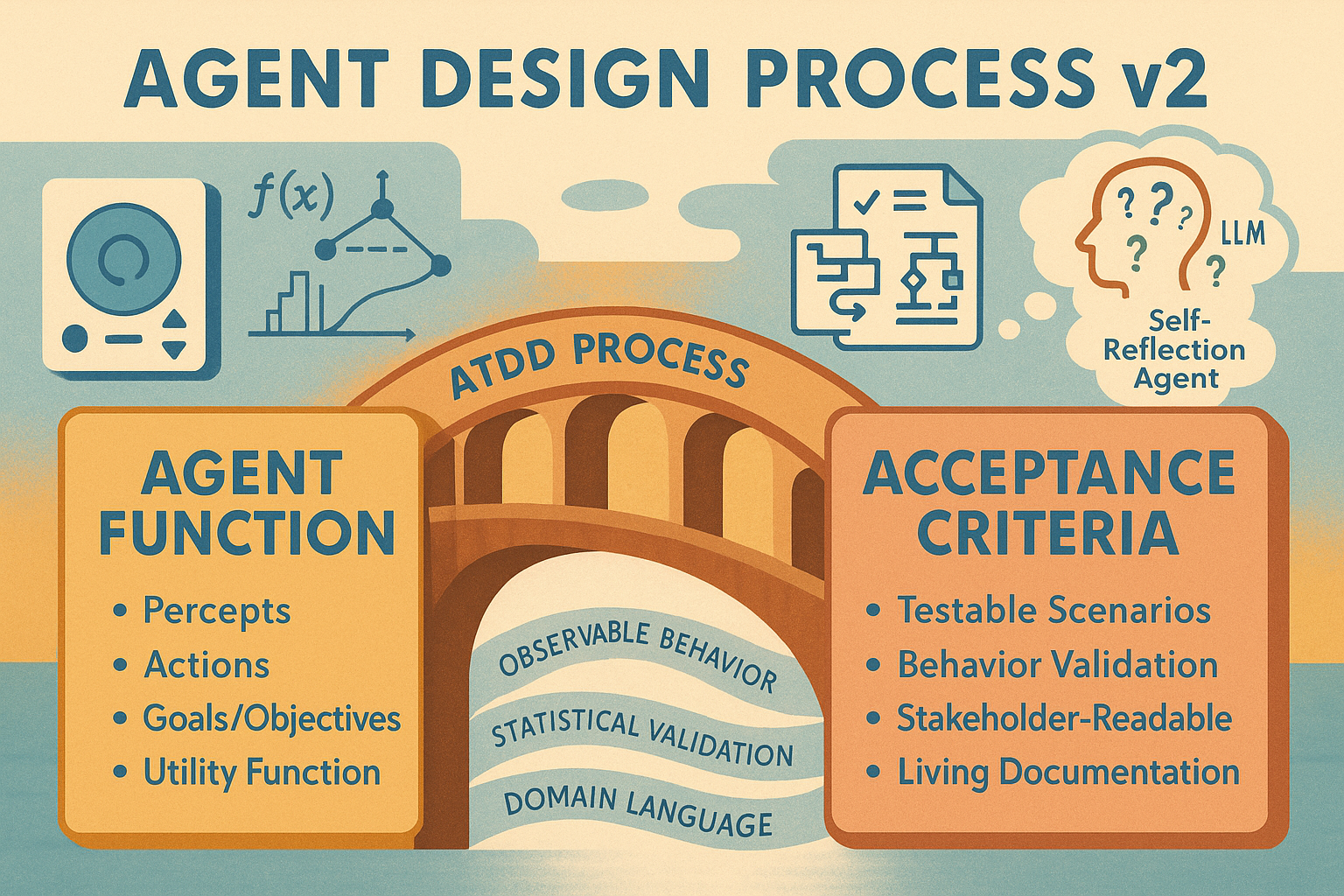

[IA 9] Agent Design Process v2: Bridging the Agent Function and Acceptance Criteria

Introduction

While studying Russell and Norvig’s Artificial Intelligence: A Modern Approach, I created a term sheet to provide guidance on the theory and vocabulary. At the end I created the first version of an Agent Design Process, based on my interpretation of their work.

In the months since, I’ve applied this process to building a Self-Consistency agent and a Self-Reflection agent. Along the way I’ve used Claude Code progressively more and have been investigating “Vibe Coding” more (Lazy Vibe Coding isn’t good) and more (be clear with architecture and testing).

There is a way to connect the Agent Design Process with quality Software Craftsmanship: the Agent Function defines not just what the Agent Program should do, but also the Acceptance Tests.

Acceptance Test Driven Development (ATDD)

Wikipedia is a great resource here. I include some quotes from its page on ATDD, followed by a note on where this application differs:

What is ATDD?

Acceptance test–driven development (ATDD) is a development methodology based on communication between the business customers, the developers, and the testers.

ATDD and TDD:

ATDD is closely related to test-driven development (TDD). It differs by the emphasis on developer-tester-business customer collaboration. ATDD encompasses acceptance testing, but highlights writing acceptance tests before developers begin coding.

Testing strategy:

Acceptance tests are a part of an overall testing strategy. They are the customer/user oriented tests that demonstrate the business intent of a system. Depending on your test strategy, you may use them in combination with other test types, e.g. lower level Unit tests, Cross-functional testing including usability testing, exploratory testing, and property testing (scaling and security).

In the Agent Design Process, ATDD is not applied as a direct relationship with business customers, but rather between steps in the design process. Its purpose is to define what the agent should do in a format that can be tested via automation.

Format:

The format follows Given-When-Then and builds on the XP/Agile approach of Specification by Example. Martin Fowler has posts on both; here is a snippet from his Given When Then post.

Given-When-Then is a style of representing tests - or as its advocates would say - specifying a system’s behavior using SpecificationByExample. It’s an approach developed by Daniel Terhorst-North and Chris Matts as part of Behavior-Driven Development (BDD). (In review comments on this, Dan credits Ivan Moore for a significant amount of inspiration in coming up with this.)

Simplified Example: Thermostat Agent

To illustrate how ATDD works for agents, let’s start with a simple thermostat agent that everyone can relate to. For those interested, a thermostat agent is a Simple Reflex Agent: it has no state and acts directly on its current percepts.

Step 1: Define the Agent Function

A thermostat agent’s function is: “Monitor temperature and control heating to maintain comfort”

- Percepts: Temperature readings, target temperature setting

- Actions: Turn heating on, turn heating off, do nothing

- Goal: Maintain temperature close to target

Step 2: Write Given-When-Then Specifications

Here are acceptance tests in plain English that anyone can understand:

Specification 1: Basic Temperature Control

Given: Target temperature is 20°C and current temperature is 15°C

When: Thermostat agent checks the temperature

Then: Heating is turned on

Specification 2: Maintain Temperature

Given: Target temperature is 20°C and current temperature is 21°C

When: Thermostat agent checks the temperature

Then: Heating is turned off

Specification 3: Avoid Rapid Switching

Given: Target temperature is 20°C and current temperature is 19.5°C

When: Thermostat agent checks the temperature

Then: Heating remains in its current state (hysteresis)
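The three specifications above can be turned into executable checks. What follows is a minimal sketch, not a definitive implementation. The names `thermostat_agent`, `Action`, and `DEAD_BAND_C` are hypothetical, and the 1°C dead band is an assumption, since the specifications do not state how wide the hysteresis zone is. Returning "do nothing" inside the dead band is what leaves the heating in its current state, so the agent itself stays stateless.

```python
from enum import Enum


class Action(Enum):
    HEAT_ON = "turn heating on"
    HEAT_OFF = "turn heating off"
    DO_NOTHING = "do nothing"


# Assumption: the specifications give no dead-band width; 1.0 degC is illustrative.
DEAD_BAND_C = 1.0


def thermostat_agent(target_c: float, current_c: float) -> Action:
    """Simple reflex agent: maps the current percept directly to an action."""
    if current_c <= target_c - DEAD_BAND_C:
        return Action.HEAT_ON
    if current_c >= target_c + DEAD_BAND_C:
        return Action.HEAT_OFF
    # Inside the dead band: leave the heating as it is (hysteresis).
    return Action.DO_NOTHING


def test_basic_temperature_control():
    # Given: target temperature is 20 degC and current temperature is 15 degC
    # When: the thermostat agent checks the temperature
    action = thermostat_agent(target_c=20.0, current_c=15.0)
    # Then: heating is turned on
    assert action is Action.HEAT_ON


def test_maintain_temperature():
    # Given: target temperature is 20 degC and current temperature is 21 degC
    action = thermostat_agent(target_c=20.0, current_c=21.0)
    # Then: heating is turned off
    assert action is Action.HEAT_OFF


def test_avoid_rapid_switching():
    # Given: target temperature is 20 degC and current temperature is 19.5 degC
    action = thermostat_agent(target_c=20.0, current_c=19.5)
    # Then: heating remains in its current state
    assert action is Action.DO_NOTHING


if __name__ == "__main__":
    test_basic_temperature_control()
    test_maintain_temperature()
    test_avoid_rapid_switching()
    print("all thermostat specifications pass")
```

Each test reads in the same Given, When, Then order as its specification, and none of them asserts on the dead-band value itself. The functions follow the `test_` naming convention, so a runner such as pytest can also collect them.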

Key Points of this Example

- The specifications are understandable - A homeowner, engineer, or tester can all understand what the thermostat should do

- Tests focus on behavior - We test WHAT the thermostat does (turn heating on/off), not HOW it decides

- No implementation details - The tests don’t mention algorithms, thresholds, or internal state

- Clear percept→action mapping - Temperature reading (percept) leads to heating control (action)

This same approach scales to more complex agents like the Self-Reflection agent, where:

- Percepts: Questions and LLM responses

- Actions: Query more LLMs or return an answer with confidence

- Goal: Provide accurate answers while minimizing computational cost

Defining Acceptance Criteria for Specific Agents

Before writing acceptance tests for a specific agent, it’s crucial to establish a systematic approach that bridges the theoretical Agent Function with practical, testable criteria. Here are the key aspects to consider:

1. Start with the Agent Function Specification

The Agent Function defines what the agent ought to do - this is your source of truth. Before writing any tests, clearly understand:

- What percepts does this agent receive?

- What actions should it take in response?

- What is the mapping logic between percepts and actions?

- What is the agent’s objective or utility function?

For example, a Self-Reflection agent’s function might be: “Map question + LLM responses → answer with confidence assessment, optimizing for accuracy while minimizing computational cost.”
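One way to make the four questions concrete is to write the Agent Function down as a typed signature before any agent logic exists. The sketch below is illustrative only: `Percept`, `QueryMore`, `ReturnAnswer`, and `majority_agent` are hypothetical names, and the majority-vote body is a placeholder rather than the Self-Reflection agent's actual logic.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Sequence, Union


@dataclass(frozen=True)
class Percept:
    """What the agent receives: the question and the LLM responses so far."""
    question: str
    llm_responses: Sequence[str]


@dataclass(frozen=True)
class QueryMore:
    """Action: ask for another LLM response."""


@dataclass(frozen=True)
class ReturnAnswer:
    """Action: return an answer with a confidence assessment."""
    answer: str
    confidence: float  # assumed to lie in [0, 1]


Action = Union[QueryMore, ReturnAnswer]

# The Agent Function is the mapping from percept to action.
AgentFunction = Callable[[Percept], Action]


def majority_agent(percept: Percept) -> Action:
    """Placeholder mapping: majority vote, confidence = share of votes."""
    if not percept.llm_responses:
        return QueryMore()
    answer, votes = Counter(percept.llm_responses).most_common(1)[0]
    return ReturnAnswer(answer=answer, confidence=votes / len(percept.llm_responses))


if __name__ == "__main__":
    agent: AgentFunction = majority_agent
    print(agent(Percept("What is 2 + 2?", [])))
    print(agent(Percept("What is 2 + 2?", ["4", "4", "5"])))
```

With the percept and action types fixed, acceptance tests can be written against `AgentFunction` alone, and any implementation that honors the signature can be swapped in.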

2. Identify Observable Behaviors (Not Implementation)

ATDD tests should focus on externally observable behaviors, not internal mechanics:

- ❌ Bad: “The agent calculates entropy using Shannon’s formula”

- ✅ Good: “The agent demonstrates uncertainty awareness in its responses”

The test should validate WHAT the agent does, not HOW it does it internally.

3. Define Success Without Predetermined Outcomes

This addresses the “cheating” problem where tests use specific data to force expected results:

- ❌ Bad: “Given answers [‘A’,‘A’,‘B’,‘B’], the agent returns ‘binary’ consensus”

- ✅ Good: “Given responses with equal split between two answers, the agent recognizes high uncertainty”

Use property-based criteria that work with any valid input, not just carefully crafted test cases.
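As a rough illustration of a property-based criterion, the sketch below generates many random even splits instead of one hand-picked list. Everything here is an assumption made for the example: `uncertainty` is a hypothetical stand-in for whatever signal the real agent exposes, and `HIGH_UNCERTAINTY` is an illustrative cut-off. It uses only the standard library; a dedicated property-testing library could play the same role.

```python
import random
import string
from collections import Counter
from typing import Sequence

# Assumption: "high uncertainty" means a score of at least 0.9 on a 0..1 scale.
HIGH_UNCERTAINTY = 0.9


def uncertainty(responses: Sequence[str]) -> float:
    """Hypothetical stand-in: 1 minus the vote margin between the top two answers."""
    if not responses:
        raise ValueError("at least one response is required")
    ranked = Counter(responses).most_common(2)
    top = ranked[0][1]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0
    return 1.0 - (top - runner_up) / len(responses)


def check_equal_split_property(trials: int = 200, seed: int = 0) -> None:
    """Property: any even split between two answers is recognized as high uncertainty."""
    rng = random.Random(seed)
    labels = list(string.ascii_uppercase)
    for _ in range(trials):
        half = rng.randint(1, 20)          # any split size
        a, b = rng.sample(labels, 2)       # any two distinct answers
        responses = [a] * half + [b] * half
        rng.shuffle(responses)             # any ordering
        assert uncertainty(responses) >= HIGH_UNCERTAINTY


if __name__ == "__main__":
    check_equal_split_property()
    print("equal-split property holds for all generated cases")
```

The check never names a particular answer, list length, or ordering, so it cannot pass merely because the test data was shaped to fit the implementation.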

4. Consider the Agent’s Decision-Making Context

Different agent types have different decision contexts that should inform your acceptance criteria:

- Reflex agents: Direct percept → action mapping

- Model-based agents: Maintain internal state

- Utility-based agents: Optimize expected utility

- Learning agents: Improve over time

Your acceptance criteria should match the agent type’s theoretical capabilities.

5. Handle Probabilistic and Emergent Behaviors

LLM-based agents often exhibit non-deterministic behaviors. Acceptance criteria should:

- Allow for variability in specific outputs

- Focus on statistical properties over multiple runs

- Define acceptable ranges rather than exact values

- Test invariants that should always hold regardless of randomness
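The four points above can be sketched as a single acceptance check that runs the agent many times. This is a toy setup under stated assumptions: `noisy_llm` simulates an LLM that is right 80% of the time, `agent_answer` is a hypothetical majority-vote agent, and the 0.80 accuracy floor is an illustrative acceptable range, not a recommended value.

```python
import random
from collections import Counter
from typing import Tuple

CORRECT = "4"
WRONG = ("3", "5")


def noisy_llm(rng: random.Random, p_correct: float = 0.8) -> str:
    """Simulated LLM (assumption): returns the correct answer with probability p_correct."""
    return CORRECT if rng.random() < p_correct else rng.choice(WRONG)


def agent_answer(rng: random.Random, samples: int = 5) -> Tuple[str, float]:
    """Hypothetical agent: most common of several samples, confidence = vote share."""
    responses = [noisy_llm(rng) for _ in range(samples)]
    answer, votes = Counter(responses).most_common(1)[0]
    return answer, votes / samples


def run_acceptance(runs: int = 200, seed: int = 42, min_accuracy: float = 0.80) -> float:
    rng = random.Random(seed)
    correct = 0
    for _ in range(runs):
        answer, confidence = agent_answer(rng)
        # Invariants: must hold on every run, regardless of randomness.
        assert answer in {CORRECT, *WRONG}
        assert 0.0 < confidence <= 1.0
        correct += answer == CORRECT
    accuracy = correct / runs
    # Statistical property: an acceptable range over many runs, not an exact value.
    assert accuracy >= min_accuracy
    return accuracy


if __name__ == "__main__":
    print(f"accuracy over 200 runs: {run_acceptance():.2f}")
```

Individual runs are allowed to be wrong; only the aggregate accuracy and the per-run invariants are part of the acceptance criteria.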

6. Translate Theoretical Requirements to Testable Behaviors

This is the core challenge of ATDD for agents. For each theoretical requirement:

- Ask: “How would I know if the agent is doing this?”

- Define observable evidence

- Create scenarios that would reveal the presence/absence of the behavior

Example translation:

- Theory: “Agent balances accuracy vs computational cost”

- Observable: “Agent stops querying when confidence is sufficiently high”

- Test: “Given high consensus early, agent uses fewer queries than maximum allowed”
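The example translation above could look like this as an executable test. It is a sketch under assumptions: `answer_with_early_stop` is a hypothetical agent loop, and `min_queries` and `stop_share` are illustrative parameters that the test deliberately does not assert on.

```python
import itertools
from collections import Counter
from typing import Callable, List, Tuple


def answer_with_early_stop(
    ask: Callable[[], str],
    max_queries: int = 10,
    min_queries: int = 3,
    stop_share: float = 0.8,
) -> Tuple[str, int]:
    """Query until the leading answer's vote share is high enough or the budget runs out."""
    if max_queries < 1:
        raise ValueError("max_queries must be at least 1")
    responses: List[str] = []
    answer = ""
    for _ in range(max_queries):
        responses.append(ask())
        answer, votes = Counter(responses).most_common(1)[0]
        if len(responses) >= min_queries and votes / len(responses) >= stop_share:
            break
    return answer, len(responses)


def test_high_consensus_uses_fewer_queries():
    # Given: every LLM response agrees (high consensus early)
    max_queries = 10
    # When: the agent answers the question
    answer, queries_used = answer_with_early_stop(lambda: "42", max_queries=max_queries)
    # Then: it uses fewer queries than the maximum allowed
    assert answer == "42"
    assert queries_used < max_queries


def test_persistent_disagreement_uses_full_budget():
    # Given: responses keep alternating between two answers
    alternating = itertools.cycle(["A", "B"])
    # When: the agent answers the question
    _, queries_used = answer_with_early_stop(lambda: next(alternating), max_queries=10)
    # Then: it keeps querying until the budget is exhausted
    assert queries_used == 10


if __name__ == "__main__":
    test_high_consensus_uses_fewer_queries()
    test_persistent_disagreement_uses_full_budget()
    print("early-stopping behaviour verified")
```

The first test asserts only `queries_used < max_queries`, not an exact count, so the stopping threshold can change without breaking the acceptance criterion.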

7. Avoid Testing Accidents of Implementation

Don’t test things that could change without affecting the agent’s function:

- Specific data structures used internally

- Exact threshold values (unless they’re part of the specification)

- Order of internal operations

- Performance characteristics (unless explicitly specified)

Focus on the contract, not the implementation details.

8. Consider the Full Percept-Action Cycle

Comprehensive acceptance tests should cover:

- Initial percept processing

- Internal state updates (if observable through actions)

- Decision making under various conditions

- Action selection and execution

- Handling of edge cases and errors

Each test should tell a complete story from percept to action.

9. Define Clear Boundaries

Establish what is in scope vs out of scope for this agent’s acceptance criteria:

- Which behaviors are essential vs nice-to-have?

- What assumptions can we make about the environment?

- What quality attributes matter (speed, accuracy, robustness)?

- What failure modes are acceptable?

Clear boundaries prevent scope creep and over-specification. Struggling to define clear boundaries is a strong indicator that there is too much complexity in your system or approach. This links to approaches covered later, such as Domain Driven Design and SOLID.

10. Make Tests Meaningful to Stakeholders

ATDD is fundamentally about communication. The tests should:

- Use domain language, not implementation jargon

- Clearly show the value the agent provides

- Be understandable by non-technical stakeholders

- Demonstrate the agent meets its intended purpose

These tests serve as living documentation of what the agent is supposed to do.

Next

Next I’m going to walk the talk and retrospectively implement this for the Self-Reflection agent. Exciting eh!! ;-)