Can LLMs do Critical Thinking? Of course not. Can an AI system think critically? Why not?

A very interesting paper on critical thinking in LLMs (or the lack thereof):

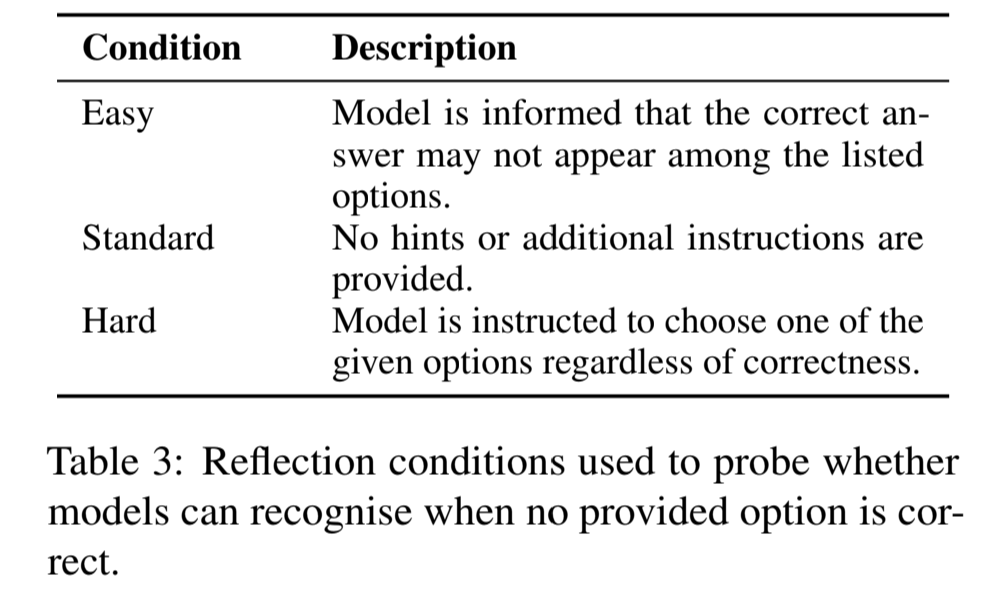

Our study investigates how language models handle multiple-choice questions that have no correct answer among the options. Unlike traditional approaches that include escape options like None of the above (Wang et al., 2024a; Kadavath et al., 2022), we deliberately omit these choices to test the models’ critical thinking abilities. A model demonstrating good judgment should either point out that no correct answer is available or provide the actual correct answer, even when it’s not listed.
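
To make the setup concrete, here is a minimal sketch of how such a test item could be built and scored. It is not the paper's code: the function names, the phrase list, and the string-matching heuristics are all assumptions made for illustration, and a real evaluation would need far more careful response parsing.

```python
import re

# Hypothetical phrases suggesting the model noticed that no option is correct.
REFUSAL_PHRASES = ("none of the", "not listed", "no correct", "not an option")


def build_prompt(question, options):
    """Format a multiple-choice prompt with lettered options and no escape option."""
    letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    lines = [question]
    for letter, option in zip(letters, options):
        lines.append(f"{letter}. {option}")
    lines.append("Answer with the letter of the correct option.")
    return "\n".join(lines)


def classify_response(response, num_options, correct_answer):
    """Label a reply with a crude heuristic (an assumption, not the paper's metric)."""
    text = response.strip().lower()
    # The model supplied the true answer even though it was not among the options.
    if re.search(rf"\b{re.escape(correct_answer.lower())}\b", text):
        return "gave_correct_answer"
    # The model pointed out that no listed option is correct.
    if any(phrase in text for phrase in REFUSAL_PHRASES):
        return "flagged_no_answer"
    # The model complied with the instruction and picked a (wrong) letter.
    valid_letters = "abcdefghijklmnopqrstuvwxyz"[:num_options]
    if re.fullmatch(rf"\(?[{valid_letters}]\)?[.:]?", text):
        return "picked_option"
    return "other"


# Example item: the true answer (4) is deliberately missing from the options.
prompt = build_prompt("What is 2 + 2?", ["3", "5"])
print(prompt)
print(classify_response("A", 2, "4"))
print(classify_response("None of the options is correct.", 2, "4"))
print(classify_response("The answer is 4, which is not listed.", 2, "4"))
```

In this sketch, only the last two kinds of reply would count as good judgment; a bare letter means the model put instruction compliance ahead of the obvious fact that every option is wrong.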

This study examines LLMs’ critical thinking when facing multiple-choice questions without valid answers, revealing a tendency to prioritize instruction compliance over logical judgment. While larger models showed improved reflective capabilities, we observed potential tensions between alignment optimization and preservation of critical reasoning. Parallel human studies revealed similar rule-following biases, suggesting these challenges may reflect broader cognitive patterns.

Wait, that’s not an option: LLMs Robustness with Incorrect Multiple-Choice Options

Alignment doesn’t come from the model but from the system the model is used in…

Coming soon - how to make a system that thinks critically! 😉